Implementing a single GraphQL across multiple data sources

Published: 19.8.2021

(This is a sponsored post.)

In this article, we will discuss how we can apply schema stitching across multiple Fauna instances. We will also discuss how to combine other GraphQL services and data sources with Fauna in one graph.

The post Implementing a single GraphQL across multiple data sources appeared first on CSS-Tricks. You can support CSS-Tricks by being an MVP Supporter.

What is Schema Stitching?

Schema stitching is the process of creating a single GraphQL API from multiple underlying GraphQL APIs.

Where is it useful?

When building large-scale applications, we often break functionality and business logic into microservices to keep concerns separate. Sooner or later, however, our client applications need to query data from several of these sources. The best practice is to expose one unified graph to all client applications, but this can be challenging because we do not want to end up with a tightly coupled, monolithic GraphQL server.

If you are using Fauna, each database has its own native GraphQL endpoint. Ideally, we want to leverage Fauna’s native GraphQL as much as possible and avoid writing application-layer code. However, if we use multiple databases, our front-end application has to connect to multiple GraphQL endpoints, which couples the client tightly to the layout of our back end. We want to avoid this in favor of one unified GraphQL server.

To remedy these problems, we can use schema stitching. Schema stitching allows us to combine multiple GraphQL services into one unified schema. In this article, we will discuss:

- Combining multiple Fauna instances into one GraphQL service

- Combining Fauna with other GraphQL APIs and data sources

- Building a serverless GraphQL gateway with AWS Lambda

Combining multiple Fauna instances into one GraphQL service

First, let’s take a look at how we can combine multiple Fauna instances into one GraphQL service. Imagine we have three Fauna database instances: Product, Inventory, and Review. Each is independent of the others and has its own graph (we will refer to these as subgraphs). We want to create a unified graph interface and expose it to the client applications, so that clients can query any combination of the downstream data sources.

We will call this unified graph interface our gateway service. Let’s go ahead and write this service.

We’ll start with a fresh Node project. Create a new folder, navigate into it, and initialize a new Node app with the following commands.

mkdir my-gateway
cd my-gateway
npm init --yes

Next, we will create a simple Express GraphQL server. Let’s install the express, express-graphql, and graphql packages with the following command.

npm i express express-graphql graphql --save

Creating the gateway server

We will create a file called gateway.js. This is the main entry point of our application. We will start by creating a very simple GraphQL server.

const express = require('express');
const { graphqlHTTP } = require('express-graphql');
const { buildSchema } = require('graphql');

// Construct a schema, using GraphQL schema language
const schema = buildSchema(`
  type Query {
    hello: String
  }
`);

// The root provides a resolver function for each API endpoint
const rootValue = {
  hello: () => 'Hello world!',
};

const app = express();

app.use(
  '/graphql',
  graphqlHTTP((req) => ({
    schema,
    rootValue,
    graphiql: true,
  })),
);

app.listen(4000);
console.log('Running a GraphQL API server at http://localhost:4000/graphql');

In the code above, we created a bare-bones express-graphql server with a sample query and a resolver. Let’s test our app by running the following command.

node gateway.js

Navigate to http://localhost:4000/graphql and you will be able to interact with the GraphQL playground.
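Besides the playground, the endpoint can also be checked from a small script. The sketch below assumes Node 18 or later (for the built-in fetch) and that the server from gateway.js is listening on port 4000. The file name and the helper names buildGraphQLRequest and runQuery are made up for illustration; they are not part of any library.

```javascript
// check-gateway.js (hypothetical helper script, not part of the original project)

// Build the options for a GraphQL-over-HTTP POST request.
function buildGraphQLRequest(query, variables = {}) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ query, variables }),
  };
}

// Send a query to a GraphQL endpoint and return the parsed JSON response.
// Assumes a global fetch, which is available in Node 18 and later.
async function runQuery(url, query, variables) {
  const response = await fetch(url, buildGraphQLRequest(query, variables));
  return response.json();
}

// Example usage, with the gateway running locally:
//   runQuery('http://localhost:4000/graphql', '{ hello }')
//     .then((result) => console.log(JSON.stringify(result)));
```

With the server running, the response should carry the greeting returned by the hello resolver in its data field.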

Creating Fauna instances

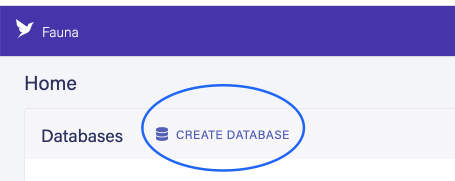

Next, we will create three Fauna databases. Each of them will act as a GraphQL service. Let’s head over to fauna.com and create our databases. I will name them Product, Inventory, and Review.

Once the databases are created we will generate admin keys for them. These keys are required to connect to our GraphQL APIs.

Let’s create three distinct GraphQL schemas and upload them to the respective databases. Here’s how our schemas will look.

# Schema for Inventory database
type Inventory {
  name: String
  description: String
  sku: Float
  availableLocation: [String]
}

# Schema for Product database
type Product {
  name: String
  description: String
  price: Float
}

# Schema for Review database
type Review {
  email: String
  comment: String
  rating: Float
}

Head over to the respective databases, select GraphQL from the sidebar, and import the schema for each database.

Now we have three GraphQL services running on Fauna. We can go ahead and interact with these services through the GraphQL playground inside Fauna. Feel free to enter some dummy data if you are following along. It will come in handy later while querying multiple data sources.
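As a sketch of what entering dummy data could look like, the snippet below prepares a mutation for the Product database. It assumes Fauna generated a createProduct mutation and a ProductInput type from our schema (its usual create<Type> naming); check the docs tab in the playground for the exact names. The product values are made-up sample data.

```javascript
// Sample mutation for the Product database.
// Assumption: createProduct and ProductInput are the names Fauna generated
// for the Product type; verify them in the GraphQL playground.
const createProductMutation = `
  mutation CreateProduct($data: ProductInput!) {
    createProduct(data: $data) {
      _id
      name
      price
    }
  }
`;

// Made-up sample values matching the fields of the Product type.
const sampleProduct = {
  name: 'Demo product',
  description: 'Sample entry for testing the gateway',
  price: 9.99,
};

// JSON body that could be POSTed to the Product database's GraphQL endpoint.
const createProductPayload = JSON.stringify({
  query: createProductMutation,
  variables: { data: sampleProduct },
});
```

In the Fauna playground, the mutation text goes into the editor and the data object into the query variables panel.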

Setting up the gateway service

Next, we will combine these into one graph with schema stitching. To do so, we need a gateway server, so we will extend the gateway.js file we created earlier. We will be using a couple of libraries from graphql-tools to stitch the graphs.

Let’s go ahead and install these dependencies on our gateway server.

npm i @graphql-tools/schema @graphql-tools/stitch @graphql-tools/wrap cross-fetch --save

In our gateway, we are going to create a new generic function called makeRemoteExecutor. This function is a factory function that returns another function. The returned asynchronous function will make the GraphQL query API call.

// gateway.js
const express = require('express');
const { graphqlHTTP } = require('express-graphql');
const { buildSchema, print } = require('graphql');
const { fetch } = require('cross-fetch');

// Factory: returns an async executor that forwards a GraphQL operation
// to the remote endpoint at `url`, authenticating with `token`.
function makeRemoteExecutor(url, token) {
  return async ({ document, variables }) => {
    // Turn the parsed GraphQL document back into a query string
    const query = print(document);
    const fetchResult = await fetch(url, {
      method: 'POST',
      headers: { 'Content-Type': 'application/json', 'Authorization': 'Bearer ' + token },
      body: JSON.stringify({ query, variables }),
    });
    return fetchResult.json();
  }
}

// Construct a schema, using GraphQL schema language
const schema = buildSchema(`
  type Query {
    hello: String
  }
`);

// The root provides a resolver function for each API endpoint
const rootValue = {
  hello: () => 'Hello world!',
};

const app = express();

app.use(
  '/graphql',
  graphqlHTTP(async (req) => {
    return {
      schema,
      rootValue,
      graphiql: true,
    }
  }),
);

app.listen(4000);
console.log('Running a GraphQL API server at http://localhost:4000/graphql');

As you can see above, makeRemoteExecutor takes two arguments. The url argument specifies the remote GraphQL URL, and the token argument specifies the authorization token.
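The executor above returns whatever the remote service sends back. One possible refinement is to fail loudly when the HTTP request itself fails. The sketch below is a variant under that assumption; the name makeCheckedExecutor and the deps parameter are made up for illustration, and deps exists only so the function can be exercised without a network connection. Whether to throw or to pass the response body through is a design choice, since some servers return useful GraphQL errors alongside a non-2xx status.

```javascript
// A stricter executor factory (illustrative sketch, not the article's code).
// `deps.fetchImpl` and `deps.printImpl` are hypothetical injection points;
// in gateway.js they would be `fetch` from cross-fetch and `print` from graphql.
function makeCheckedExecutor(url, token, deps) {
  const { fetchImpl, printImpl } = deps;
  return async ({ document, variables }) => {
    const query = printImpl(document);
    const response = await fetchImpl(url, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: 'Bearer ' + token,
      },
      body: JSON.stringify({ query, variables }),
    });
    // Surface transport-level failures instead of parsing an error page.
    if (!response.ok) {
      throw new Error(`Remote GraphQL request failed with HTTP ${response.status}`);
    }
    return response.json();
  };
}

// Example usage inside gateway.js:
//   const executor = makeCheckedExecutor(url, token, { fetchImpl: fetch, printImpl: print });
```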

We will create another function called makeGatewaySchema. In this function, we will make the proxy calls to the remote GraphQL APIs using the previously created makeRemoteExecutor function.

// gateway.js
const express = require('express');
const { graphqlHTTP } = require('express-graphql');
const { introspectSchema } = require('@graphql-tools/wrap');
const { stitchSchemas } = require('@graphql-tools/stitch');
const { fetch } = require('cross-fetch');
const { print } = require('graphql');

function makeRemoteExecutor(url, token) {
  return async ({ document, variables }) => {
    const query = print(document);
    const fetchResult = await fetch(url, {
      method: 'POST',
      headers: { 'Content-Type': 'application/json', 'Authorization': 'Bearer ' + token },
      body: JSON.stringify({ query, variables }),
    });
    return fetchResult.json();
  }
}

async function makeGatewaySchema() {
  // One executor per Fauna database; the key decides which database is queried
  const reviewExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQZPUejACQ2xuvfi50APAJ397hlGrTjhdXVta');
  const productExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQbI02HACQwTaUF9iOBbGC3fatQtclCOxZNfp');
  // Note: this listing reuses the Product key; pass the Inventory database's own key here
  const inventoryExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQbI02HACQwTaUF9iOBbGC3fatQtclCOxZNfp');

  return stitchSchemas({
    subschemas: [
      {
        schema: await introspectSchema(reviewExecutor),
        executor: reviewExecutor,
      },
      {
        schema: await introspectSchema(productExecutor),
        executor: productExecutor
      },
      {
        schema: await introspectSchema(inventoryExecutor),
        executor: inventoryExecutor
      }
    ],
    typeDefs: 'type Query { heartbeat: String! }',
    resolvers: {
      Query: {
        heartbeat: () => 'OK'
      }
    }
  });
}

// ...

We are using the makeRemoteExecutor function to create our remote GraphQL executors. We have three remote executors here, one each for the Product, Inventory, and Review services. As this is a demo application, I have hardcoded the admin API keys from Fauna directly in the code. Avoid doing this in a real application: these secrets should never be exposed in code. Use environment variables or a secrets manager to load these values at runtime.
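A minimal sketch of the environment-variable approach might look like the following. The variable names (FAUNA_REVIEW_KEY and so on) and the helper loadFaunaSecrets are hypothetical; use whatever naming fits your deployment.

```javascript
// Read the Fauna keys from environment variables instead of hardcoding them.
// The variable names below are assumptions made for this example.
const REQUIRED_SECRETS = ['FAUNA_REVIEW_KEY', 'FAUNA_PRODUCT_KEY', 'FAUNA_INVENTORY_KEY'];

function loadFaunaSecrets(env = process.env) {
  // Fail early with a clear message if any key is missing or empty.
  const missing = REQUIRED_SECRETS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return {
    review: env.FAUNA_REVIEW_KEY,
    product: env.FAUNA_PRODUCT_KEY,
    inventory: env.FAUNA_INVENTORY_KEY,
  };
}

// Example usage inside makeGatewaySchema:
//   const secrets = loadFaunaSecrets();
//   const reviewExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', secrets.review);
```

Failing at startup when a key is missing is usually easier to debug than an authorization error from a downstream service later on.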

As you can see in the code above, we return the output of the stitchSchemas function from @graphql-tools/stitch. The function takes an options object with a property called subschemas, where we pass an array of all the subgraphs we want to fetch and combine. We also use a function called introspectSchema from @graphql-tools/wrap. It uses the executor to run an introspection query against the remote service and builds a schema from the result; the executor is what then proxies requests from the gateway to the downstream service.

You can learn more about these functions on the graphql-tools documentation site.

Finally, we need to call makeGatewaySchema. We can remove the previously hardcoded schema from our code and replace it with the stitched schema.

// gateway.js
// ...

const app = express();

app.use(
  '/graphql',
  graphqlHTTP(async (req) => {
    const schema = await makeGatewaySchema();
    return {
      schema,
      context: { authHeader: req.headers.authorization },
      graphiql: true,
    }
  }),
);

// ...

When we restart our server and go back to localhost, we will see that queries and mutations from all Fauna instances are available in our GraphQL playground.
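Note that the handler above calls makeGatewaySchema on every request, so every request introspects all remote services again. One way to avoid this, sketched below, is to build the schema once and reuse the resulting promise. The helper name once is made up for illustration. The trade-off is that schema changes in the downstream services are only picked up after a restart.

```javascript
// Wrap an async factory so it runs at most once; later calls reuse the
// same promise. If the factory fails, the cache is cleared so that the
// next call can retry.
function once(factory) {
  let cached = null;
  return () => {
    if (cached === null) {
      cached = Promise.resolve()
        .then(factory)
        .catch((error) => {
          cached = null;
          throw error;
        });
    }
    return cached;
  };
}

// Possible usage in gateway.js, assuming makeGatewaySchema from above:
//   const getGatewaySchema = once(makeGatewaySchema);
//   graphqlHTTP(async (req) => ({
//     schema: await getGatewaySchema(),
//     context: { authHeader: req.headers.authorization },
//     graphiql: true,
//   }))
```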

Let’s write a simple query that will fetch data from all Fauna instances simultaneously.
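As a sketch of such a query, the snippet below asks for one document from each database in a single request. It assumes Fauna generated the findProductByID, findInventoryByID, and findReviewByID queries for our types (its usual find<Type>ByID naming); check the playground’s docs tab for the exact field names. The IDs are placeholders that must be replaced with document IDs from your own databases.

```javascript
// One query that touches all three subgraphs through the gateway.
// Assumption: the find<Type>ByID field names match what Fauna generated.
const combinedQuery = `
  query GetEverything($productId: ID!, $inventoryId: ID!, $reviewId: ID!) {
    findProductByID(id: $productId) {
      name
      price
    }
    findInventoryByID(id: $inventoryId) {
      name
      availableLocation
    }
    findReviewByID(id: $reviewId) {
      email
      rating
    }
  }
`;

// Placeholder IDs; replace them with real document IDs before sending.
const combinedRequestBody = JSON.stringify({
  query: combinedQuery,
  variables: {
    productId: '<product-id>',
    inventoryId: '<inventory-id>',
    reviewId: '<review-id>',
  },
});
```

The gateway splits the operation by root field and forwards each part to the executor of the subschema that owns it, so the client only ever talks to one endpoint.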

Stitching third-party GraphQL APIs

We can stitch third-party GraphQL APIs into our gateway as well. For this demo, we are going to stitch the SpaceX open GraphQL API with our services.

The process is the same as above. We create a new executor and add it to our subschemas array.

// ...

async function makeGatewaySchema() {
  const reviewExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQdRZVpACRMEEM1GKKYQxH2Qa4TzLKusTW2gN');
  const productExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQdSdXiACRGmgJgAEgmF_ZfO7iobiXGVP2NzT');
  const inventoryExecutor = makeRemoteExecutor('https://graphql.fauna.com/graphql', 'fnAEQdR0kYACRWKJJUUwWIYoZuD6cJDTvXI0_Y70');
  // The SpaceX API is public, so no token is passed
  const spacexExecutor = makeRemoteExecutor('https://api.spacex.land/graphql/')

  return stitchSchemas({
    subschemas: [
      {
        schema: await introspectSchema(reviewExecutor),
        executor: reviewExecutor,
      },
      {
        schema: await introspectSchema(productExecutor),
        executor: productExecutor
      },
      {
        schema: await introspectSchema(inventoryExecutor),
        executor: inventoryExecutor
      },
      {
        schema: await introspectSchema(spacexExecutor),
        executor: spacexExecutor
      }
    ],
    typeDefs: 'type Query { heartbeat: String! }',
    resolvers: {
      Query: {
        heartbeat: () => 'OK'
      }
    }
  });
}
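One detail worth noting: the SpaceX executor is created without a token, so makeRemoteExecutor as written sends the header value 'Bearer undefined'. A public API may well ignore it, but it is cleaner to leave the header out. The helper below is a small sketch of that idea; the name buildHeaders is made up for illustration.

```javascript
// Build request headers, adding Authorization only when a token is given.
function buildHeaders(token) {
  const headers = { 'Content-Type': 'application/json' };
  if (token) {
    headers.Authorization = 'Bearer ' + token;
  }
  return headers;
}

// Possible usage inside makeRemoteExecutor:
//   const fetchResult = await fetch(url, {
//     method: 'POST',
//     headers: buildHeaders(token),
//     body: JSON.stringify({ query, variables }),
//   });
```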

Deploying the gateway

To make this a true serverless solution, we should deploy our gateway to a serverless function. For this demo, I am going to deploy the gateway to an AWS Lambda function. Netlify and Vercel are two alternatives to AWS Lambda.

I am going to use the Serverless Framework to deploy the code to AWS. Let’s install the dependencies for it.

npm i -g serverless # if you don't have the serverless framework installed already
npm i serverless-http body-parser --save

Next, we need to create a configuration file called serverless.yaml.

# serverless.yaml
service: my-graphql-gateway

provider:
  name: aws
  runtime: nodejs14.x
  stage: dev
  region: us-east-1

functions:
  app:
    handler: gateway.handler
    events:
      - http: ANY /
      - http: 'ANY {proxy+}'

Inside serverless.yaml, we define information such as the cloud provider, the runtime, and the path to our Lambda function. Feel free to take a look at the official documentation for the Serverless Framework for more in-depth information.

We will need to make some minor changes to our code before we can deploy it to AWS. In gateway.js, we add the body-parser library to parse JSON request bodies, and we add the serverless-http library. Wrapping the Express app instance with the serverless function and exporting the result as handler (the name referenced in serverless.yaml) takes care of all the underlying Lambda configuration.
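A minimal sketch of what these changes could look like is shown below. It assumes makeGatewaySchema is defined earlier in the same gateway.js file, as in the previous sections, and that the packages installed above are available. This is an illustration of the approach, not the article’s original listing.

```javascript
// gateway.js (deployment sketch)
const express = require('express');
const bodyParser = require('body-parser');
const serverless = require('serverless-http');
const { graphqlHTTP } = require('express-graphql');

// ... makeRemoteExecutor and makeGatewaySchema as defined earlier ...

const app = express();

// Parse JSON request bodies before they reach the GraphQL middleware.
app.use(bodyParser.json());

app.use(
  '/graphql',
  graphqlHTTP(async (req) => {
    const schema = await makeGatewaySchema();
    return {
      schema,
      context: { authHeader: req.headers.authorization },
      graphiql: true,
    };
  }),
);

// No app.listen() here: Lambda invokes the exported handler instead.
// The export name matches `handler: gateway.handler` in serverless.yaml.
module.exports.handler = serverless(app);
```

For local development, one option is to keep a separate entry file that imports the app and calls app.listen, so the Lambda entry point stays free of server start-up code.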

We can run the following command to deploy this to AWS Lambda.

serverless deploy

This will take a minute or two. Once the deployment is complete, we will see the API URL in our terminal.

Make sure you put /graphql at the end of the generated URL (e.g. https://gy06ffhe00.execute-api.us-east-1.amazonaws.com/dev/graphql).

There you have it. We have achieved complete serverless nirvana 😉. We are now running three independent Fauna instances, stitched together with a GraphQL gateway.

Feel free to check out the code for this article here.

Conclusion

Schema stitching is one of the most popular solutions for breaking down monoliths and achieving separation of concerns between data sources. There are other solutions as well, such as Apollo Federation, which works in much the same way. If you would like to see an article like this about Apollo Federation, please let us know in the comment section. That’s it for today; see you next time.
